Book 6 of 2026: Scales by Christopher Hinz.

Fourth Philip K. Dick award nominee of the year. As such, not reviewed.

Enthusiastically Ambiverted Hopepunk

I read…a lot. Here’s where I ramble about books and printed media.

Book 5 of 2026: Outlaw Planet by M. R. Carey.

Third Philip K. Dick award nominee of the year. As such, not reviewed.

Book 4 of 2026: City of All Seasons by Oliver K. Langmead and Aliya Whiteley.

Second Philip K. Dick award nominee of the year. As such, not reviewed.

Book 3 of 2026: Casual by Koji A. Dae.

First Philip K. Dick award nominee of the year. As such, not reviewed.

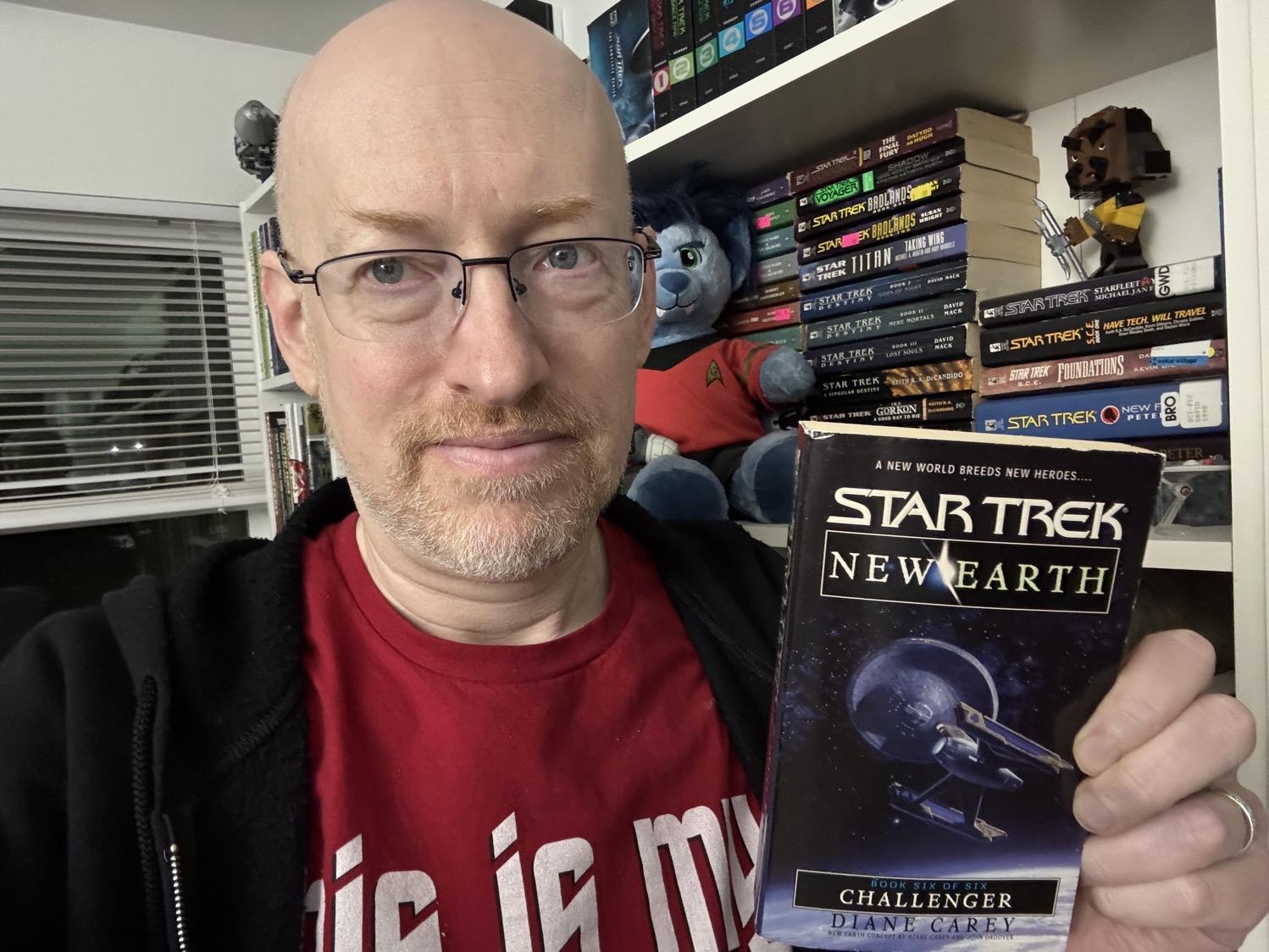

Book 2 of 2026: Challenger by Diane Carey: ⭐️⭐️⭐️.

The tone of this one was really odd. Much of it is centered on the main crew of another ship, which is fine in and of itself, but there was something about their interactions that was so flippant and irreverent that for me, it blew right past “different ship with a different, quirkier feel than the Enterprise” all the way to “how are these people functioning with each other, within Starfleet, or in the universe in general?” As a capstone to the series, it wrapped up all the major plot points well enough, but the odd tone was really off-putting for me.

Happy holidays (part two)!

Well, we wrapped up 2025…and as happy as we were to see 2025 end, 2026 is already looking to keep the dumpster fires burning bright.

At work, the week was fine. Back in the office this week, though as it was still in the holiday break, it was another pretty slow week. Next week classes start, so things will pick back up again. The slowdown is always nice, but it’ll also be good to have things back to normal after the holidays.

Here at home, we had a nice quiet New Year’s Eve. It was even a bit quieter than we expected, as there weren’t as many local unsanctioned fireworks as there have been in years past.

Out in the wider world, though, we all woke up one morning just a few days into the year to discover that the US had invaded Venezuela and abducted its president and his wife. Because…sigh. We are continuing to speed run becoming everything as a country that I was brought up being told that we weren’t. And even though the older I get and the more I learn, the more obvious it is how far we always have been from the ideals we claimed to uphold, it’s still mind-boggling to be where we are now.

As I said on Mastodon: “I’m confused: Is being a brown-skinned person accused of being involved with drugs something that gets you kidnapped and forcibly kicked out of the country or kidnapped and forcibly brought into the country?”

Though really, after what we saw of Trump in his first term and so far in his second, the only thing that’s really surprising me about all of this is how many people are just…going along with it (most notably Congress — especially, but not at all limited to, the Republican party — and the Supreme Court). The system of checks and balances has apparently given up trying to either check or balance, and that’s perhaps the most troubling part of all of this.

This week I recorded my responses to the current SFWA survey on AI use in the SFF writing/publishing industry, did my annual reading wrap-up for the year, and posted my resolutions for this year.

Finished my last book of the year, Rough Trails by L.A. Graf, and my first book of the year, Thin Air by Dean Wesley Smith and Kristine Kathryn Rusch, both parts of a six-book TOS-era Star Trek series.

We watched two movies over the weekend:

Mission Impossible: The Final Reckoning (2025): ⭐️⭐️: A particularly disappointing finale to the series.

NOTE: For regular readers (assuming there are any), a bit of clarification on how I link some items: I use archive.is for pages on sites that are paywalled (including sites that will only show content if adblockers are disabled) and for Substack pages (because Substack is another Nazi bar). Starting with this week’s post, I will also include links to the original pages, as not everyone has the same attitudes as I do about these things; some readers may have subscriptions to the sites in question, may not be as annoyed as I am at dealing with paywalls, ads, and the like, or may have either accepted Substack as a “necessary evil” or be unaware of its problematic practices.

National Society of Tax Professionals: USPS Announces Changes to the Postmark Date System: “…while a postmark confirms the USPS possessed a mail piece on the date inscribed, that date does not necessarily align with the date the USPS first accepted possession of the item.” Potentially impactful in a number of important scenarios, including voting by mail. Undated informational page, but the rule took effect in November 2025.

Foz Meadows: Against AI (archive.is link of Substack original): “AI is unethical on a scale that SFF authors should be uniquely placed to appreciate, its evils mirroring metaphors that are older than our present civilization. AI is the cursed amulet, the magic mirror, the deal with the devil, the doppelganger that learns our secrets and steals our face; it’s a faerie illusion, leprechaun gold, the fox’s trick that gives rot the look of resplendence, the naked emperor parading with his cock out; it’s the disembodied voice that whispers let me in, the zombie virus that transforms the known into the unrecognizable, the corrupting fungi whose tendrils invade and poison. It’s the literal fucking One Ring, telling us that of course we’d use its power for good, compelling us to pick it up so that through us, it might do great evil.”

Chuck Wendig: My Open Letter to That Open Letter About AI in Writing and Publishing: “AI IS NOT INEVITABLE. ¶ The only strategy here is the sum total pushback against its uncanny horrors and its non-consensual intrusion into every corner of our world — it steals our content, guzzles our water, increases our power bills, is crammed into services we didn’t ask for it to be crammed into while also charging us more money for the “privelege.” There is no strategy here except to find the fields where the AI grows and metaphorically set them aflame. ¶ And shame and anger against corporate overreach is a powerful fire.”

Trekorama!: 3D walkthroughs of locations on various Star Trek ships, including the Enterprise 1701 (main bridge), 1701-D (main bridge, engineering, sick bay, Ten-Forward, transporter room, Picard, Data, Troi, and Worf’s quarters, and a shuttle), 1701-E (bridge), and Kelvinverse version (bridge and corridor), Defiant (deck one), Voyager (deck one, sickbay, transporter room, engineering, mess hall), Discovery (bridge, transporter room, mess hall, and corridor), and Klingon Bird of Prey (bridge), plus the real-world ISS.

David Reamer at the Anchorage Daily News: Termination dust: Its history, evolution in meaning and possible origin (archive.is link of a paywalled original): “…the history and evolution of termination dust as a turn of phrase offers education, enlightenment and entertainment. Over the decades, there have been changes in meaning and connotation. Throughout those years, it remains a significant detail of local history, a widely recognizable bit of slang whose lore maps closely against that of the town itself.”

Robin Young and Emiko Tamagawa at WBUR: ‘Wake Up Dead Man’: Rian and Nathan Johnson on blending mystery and faith in new ‘Knives Out’ movie: Brief but interesting interview touching on the religious motifs in Wake Up Dead Man.

John Scalo: Was Daft Punk Having a Laugh When They Chose the Tempo of Harder, Better, Faster, Stronger?: “I think our helmet-clad robot friends might have been making a little joke that we’ve apparently all missed. The BPM of Harder, Better, Faster, Stronger is actually 123.45.” Fun bit of music trivia, plus a bit of a peek at the difficulties of having a computer do something that seems relatively easy for humans; in this case, determining a song’s tempo.

Book 1 of 2026: Thin Air by Dean Wesley Smith and Kristine Kathryn Rusch: ⭐️⭐️⭐️.

Another decent book in the series, with another crisis for the Enterprise to solve and the colonists to endure. I’m starting to wonder if they’ll actually be able to wrap up all the dangling threads in just one more book.

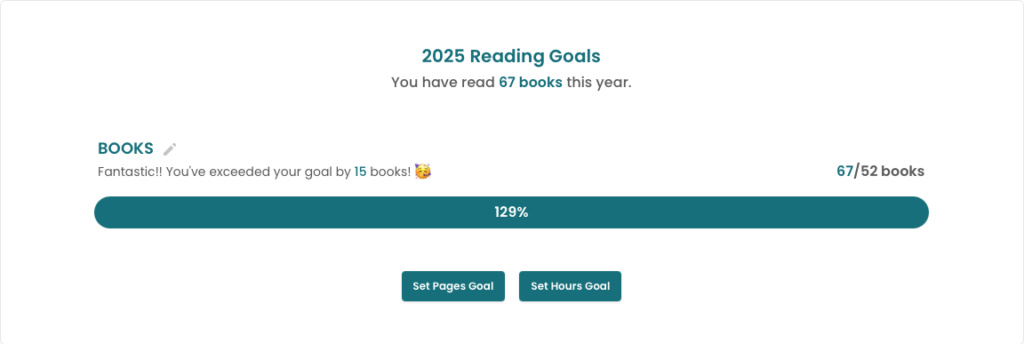

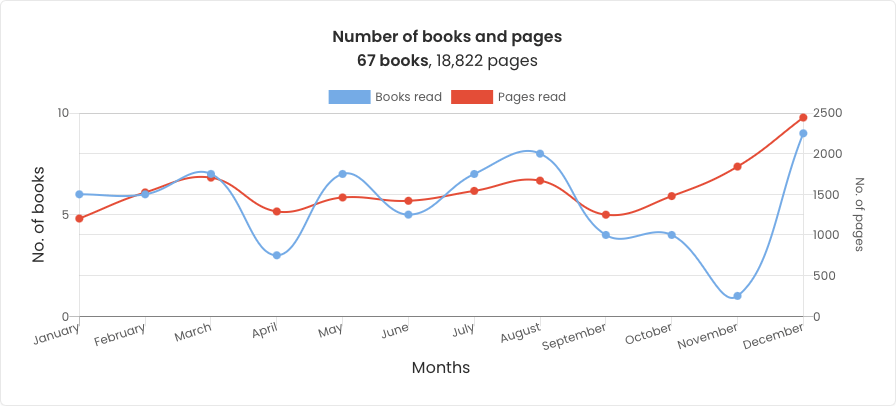

Every year, I set myself a goal of reading at least 52 books over the course of the year — an average of one a week. This year I made it to 67 books. Here’s a quick (not really) overview…

And again, the trend of the last few years holds true, with another year of primarily escapist fluff. Surprised? I’m not. Have you seen…everything? Still?

Non-fiction: Four books, accounting for 6% of my reading, but I liked all four of them. On a historical bent, there were the World War II-era United States Office of Strategic Services Simple Sabotage Field Manual and Lucy Worsley’s A Very British Murder; John Tenuto and Maria Jose Tenuto’s Star Trek III: The Search for Spock: The Making of the Classic Film bridged the non-fiction world with my Star Trek fandom; and on a technical bent, Thom Hogan’s Complete Guide to the Nikon Z5II is a deep dive into the intricacies of my new camera.

Non-genre-fiction (where “genre” is shorthand — though not that short, if you include this parenthetical — for science fiction, fantasy, and horror): None, or perhaps almost none; the Nick Mamatas-edited anthology 120 Murders: Dark Fiction Inspired by the Alternative Era has a few speculative fiction entries, but as a whole probably wouldn’t be classified that way, so can count for this category.

Quality genre fiction: About the same as last year; primarily the Philip K. Dick nominees and my Hugo project, with a few others added here and there.

As usual, I read all of the books nominated for this year’s Philip K. Dick awards. However, I’m no longer posting my thoughts or reviews on the nominees, as I am the coordinator for the Philip K. Dick award ceremony at Norwescon. While I have no input into selecting any of the nominees or the eventual winner, I don’t want to give any appearance of impropriety. So, I’ll just read and enjoy each year’s nominees, and you all will have to make your own judgements as to your favorites.

I only added four books to my Hugo reading project, and one was somewhat accidental. Only getting through four was due to a few factors, including deciding to read the entirety of Martha Wells’s excellent The Murderbot Diaries series before attending this year’s Worldcon here in Seattle where she was a guest of honor (this also accounted for my “accidental” read, as I’d forgotten that Network Effect was a Hugo Best Novel winner), and slogging my way through Susanna Clarke’s Jonathan Strange & Mr Norrell, which took slightly over a month. Of the four I read this year, Network Effect was my favorite.

Outside of those two categories and in addition to reading all of the aforementioned Murderbot series, I also finished Lois McMaster Bujold’s Vorkosigan Saga, read two of the books in Bujold’s World of the Five Gods series (one of which, Paladin of Souls, is another Hugo Best Novel winner), and read both books of Catherynne Valente’s Space Opera series. All of those were good. Apparently it was a series binge sort of year.

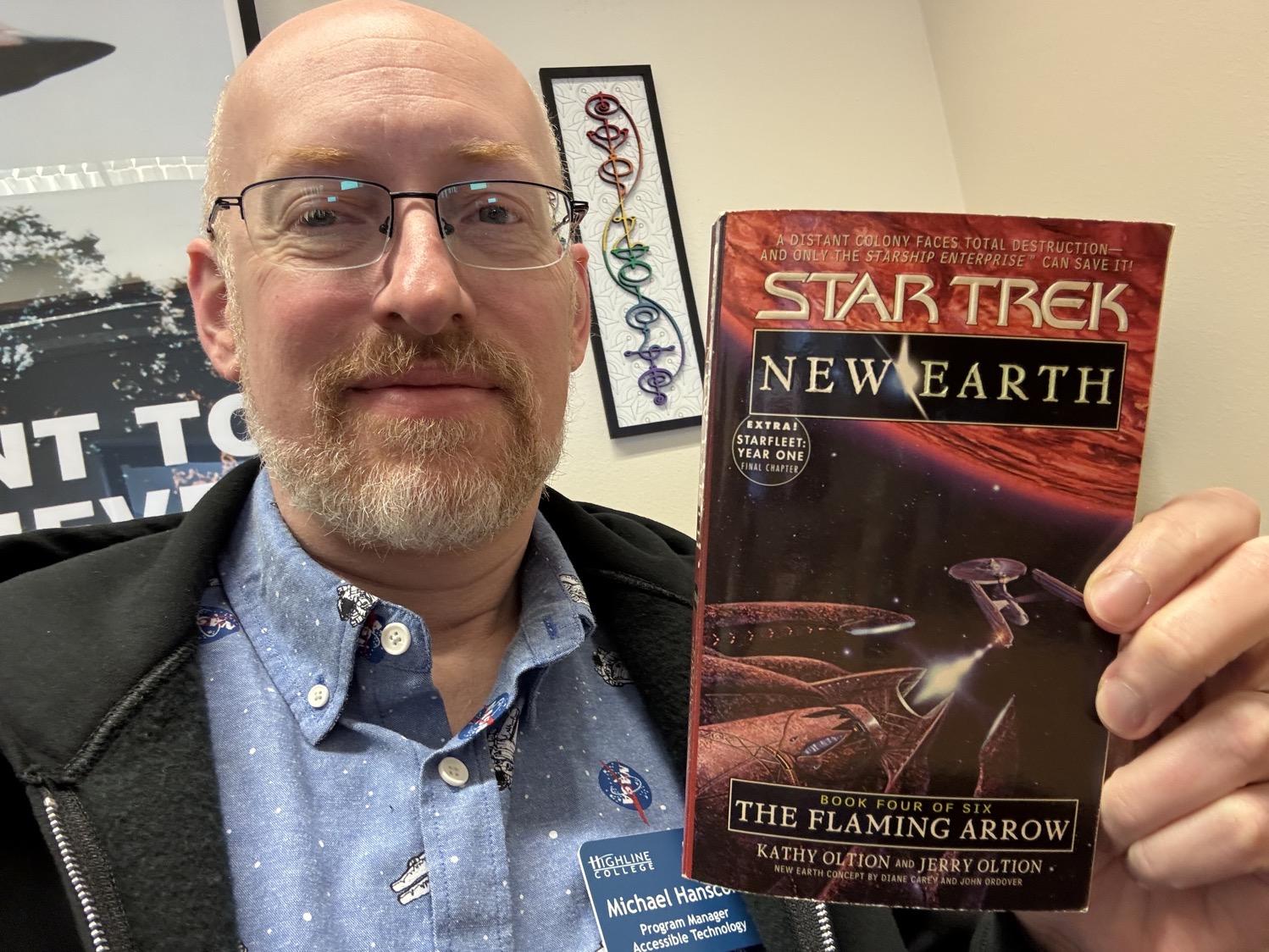

Fluff genre fiction: Unsurprisingly, this once again ended up being the strong majority of this year’s reading. Lots of Star Trek novels, with a few detours here and there. And given everything that was going on in 2020, 2021, 2022, 2023, 2024, and 2025, it was very nice to have a bookshelf full of options that wouldn’t take a whole lot of brain power for me to disappear into. As I’ve now read most of the TOS books that have been released, most of this year’s reads were TNG-era, but I’m closing out the year with the TOS-era six-book “New Earth” series (again with “series” being something of a theme this year). That series will also start my 2026 reading, as I only got through four of the six before the end of 2025.

I’m still subscribing to two SF/F magazines (Uncanny and Clarkesworld), though I’ve slacked off on actually reading them for the past few months — not due to anything with the magazines themselves, but just because I’ve been more in the mood to work on emptying out my physical “to read” shelves. I’m not sure how much magazine reading I’ll do this coming year, but I will be continuing to subscribe, as I want both of these magazines to continue publishing, and it’ll be nice to have some back stock to dive into when I’m ready (or when I’m traveling, since these are both electronic distributions).

Finally, Storygraph’s stats on my year’s reading tell me:

On to 2026!

Book 67 of 2025: ⭐️⭐️⭐️

Almost a four-star, due to a particularly imaginative doomsday weapon that really had me lost as to how they were going to technobabble their way out of it. Settled on three, though, as it is a “middle book” that doesn’t stand alone on its own. Still, a more engaging entry than many middle books end up being.

While I’m not an SFWA member or even an author (beyond this little blog), as a lifelong reader of science fiction, I figured it was worth a few minutes to add my response to their current Survey on LLM Use in Industry. For the sake of posterity, here are my responses:

(Note: Some time ago there was an online tool to look up sources that were used in one of the earlier revisions of one of the larger LLMs; I don’t currently remember who offered the tool or the specifics of the training database being reviewed. I do remember that both this blog and the Norwescon website, of which I’ve been both author and editor of much of the content for the past 15 years, were included in the training database.)

How has your writing practice changed since the emergence of Generative AI and related LLM integrations?

(Note: The first and fourth of these options seem to contradict each other. For clarity, I either disable or, if it can’t be disabled, actively avoid using generative AI; as noted in the sidebar of this blog, I do use machine-learning/LLM-based tools such as speech-to-text transcription, but when I do, I check and edit the output for accuracy.)

Which of the following most closely resembles your position on the use of Large Language Models (LLMs) in the writing process?

My actual answer is probably somewhere between the first (no ethical use-case) and second (recognizing LLM use in some tools) options.

One of the biggest problems with the current discussions (including this survey and in the File770 threads started off of Erin Underwood’s open letter) is the grouping of several related but distinct technologies under the banner term of “AI”.

Machine learning and LLM-backed analysis models are one thing. These are the technologies that have been used for years in many different contexts, including (some) spelling and grammar checkers, speech-to-text transcription, simple text-to-speech generation (suitable for screen readers and similar applications, not for audiobook creation), medical analysis, and so on. These are analytical or simple transformative, not creative, processes. In all cases, though, the output should be reviewed and checked by humans, not accepted as-is by default.

Generative AI (genAI) is the severely problematic aspect, for all the reasons mentioned by many, many people advocating for its avoidance (the many unethical practices in the creation and ongoing use of the technology, social and environmental costs, high error rates, and many more).

It’s unfortunate that all of these aspects are now grouped together as “AI”, as it makes it nearly impossible to approach the subject with any amount of nuance. I suspect that nuance was what Ms. Underwood was attempting; however, as she also falls victim to the same confusion, she sorely missed the mark (and has continued to do so in her responses).

As a reader, I would be very disappointed to see the Nebulas (and any other award) accepting the use of genAI in the creation of nominated (let alone awarded) works.

(Note: I wrote about the machine learning vs. genAI confusion on this blog earlier this year.)

What forms of guidance do you think would most benefit writers trying to navigate the growing presence of LLMs in our industry?

Clarity in defining the differences among the technologies and determining which may be acceptable (such as speech-to-text transcription, spell/grammar checkers, etc.), depending on the technology and its use, and which are unacceptable (genAI for text or art creation).