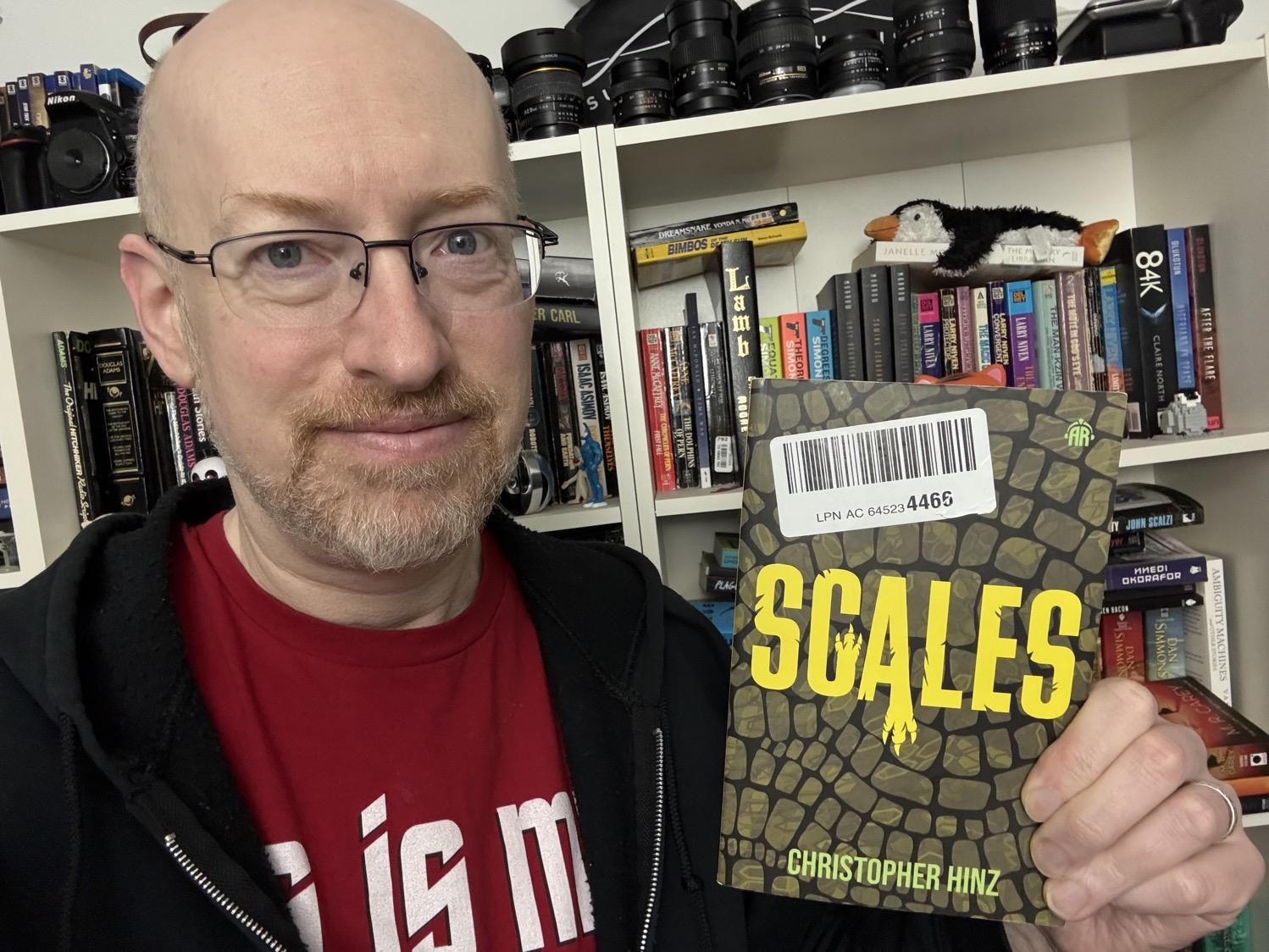

Book 6 of 2026: Scales by Christopher Hinz.

Fourth Philip K. Dick award nominee of the year. As such, not reviewed.

Enthusiastically Ambiverted Hopepunk

Whatever I’m geeking out about at the time.

A random selection of lower-tempo (sub-100bpm) favorites. Just getting back into the swing of things after many months away. Got a gig coming up again, so time to get some practice in! There are a couple definite goofs in here; just picture everyone in the club turning to good-naturedly point and laugh at the DJ before going back to dancing. :)

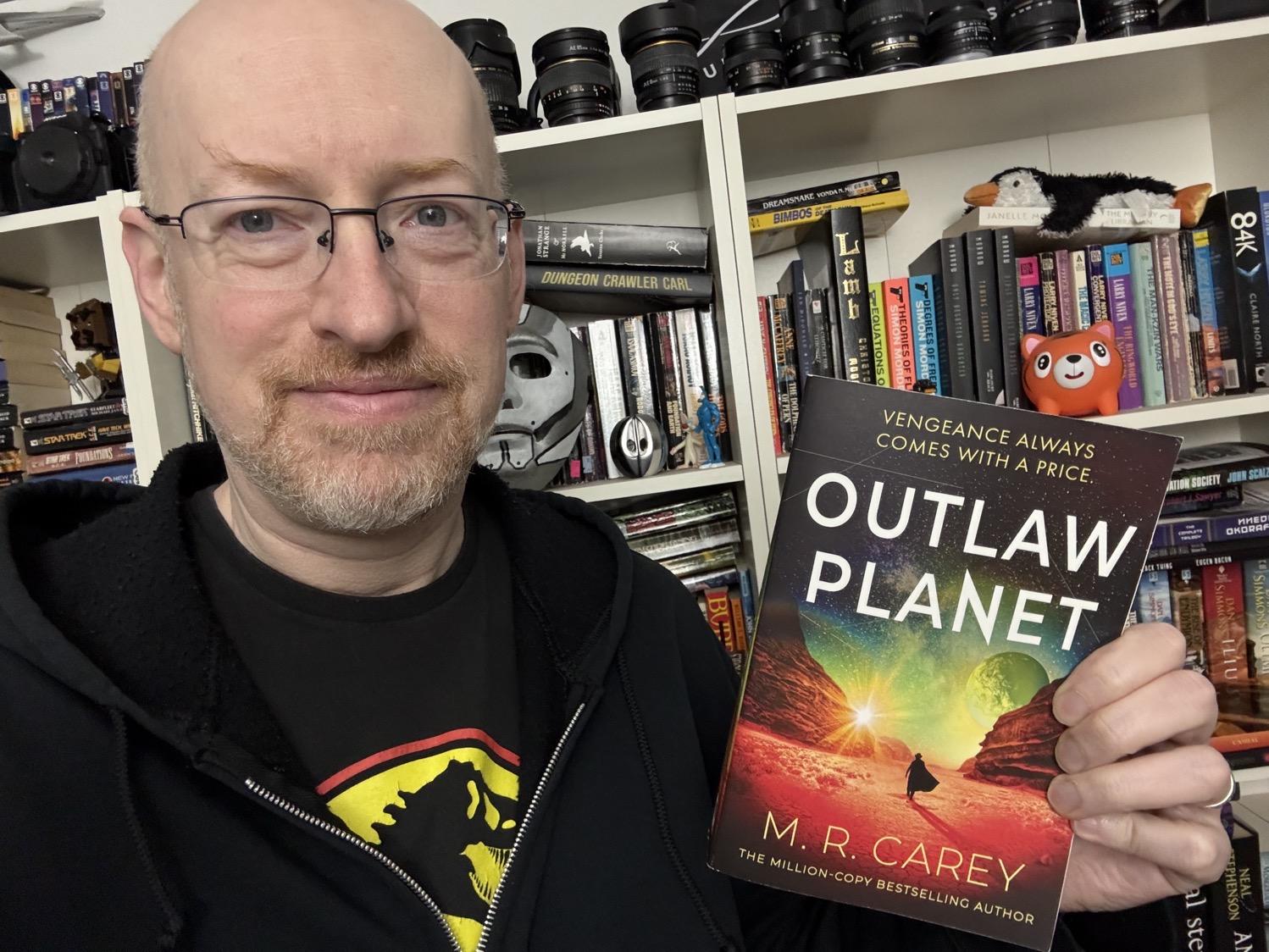

Book 5 of 2026: Outlaw Planet by M. R. Carey.

Third Philip K. Dick award nominee of the year. As such, not reviewed.

Once again, I missed a week. These things happen! So here we are.

The biggest bits of the last two weeks at work were two afternoons on Zoom for the winter DSSC conference, connecting with disability services workers across the state’s college system, and finishing my annual performance review. Both went well, and for the latter, everyone still likes me, and I have a good set of goals for the next year.

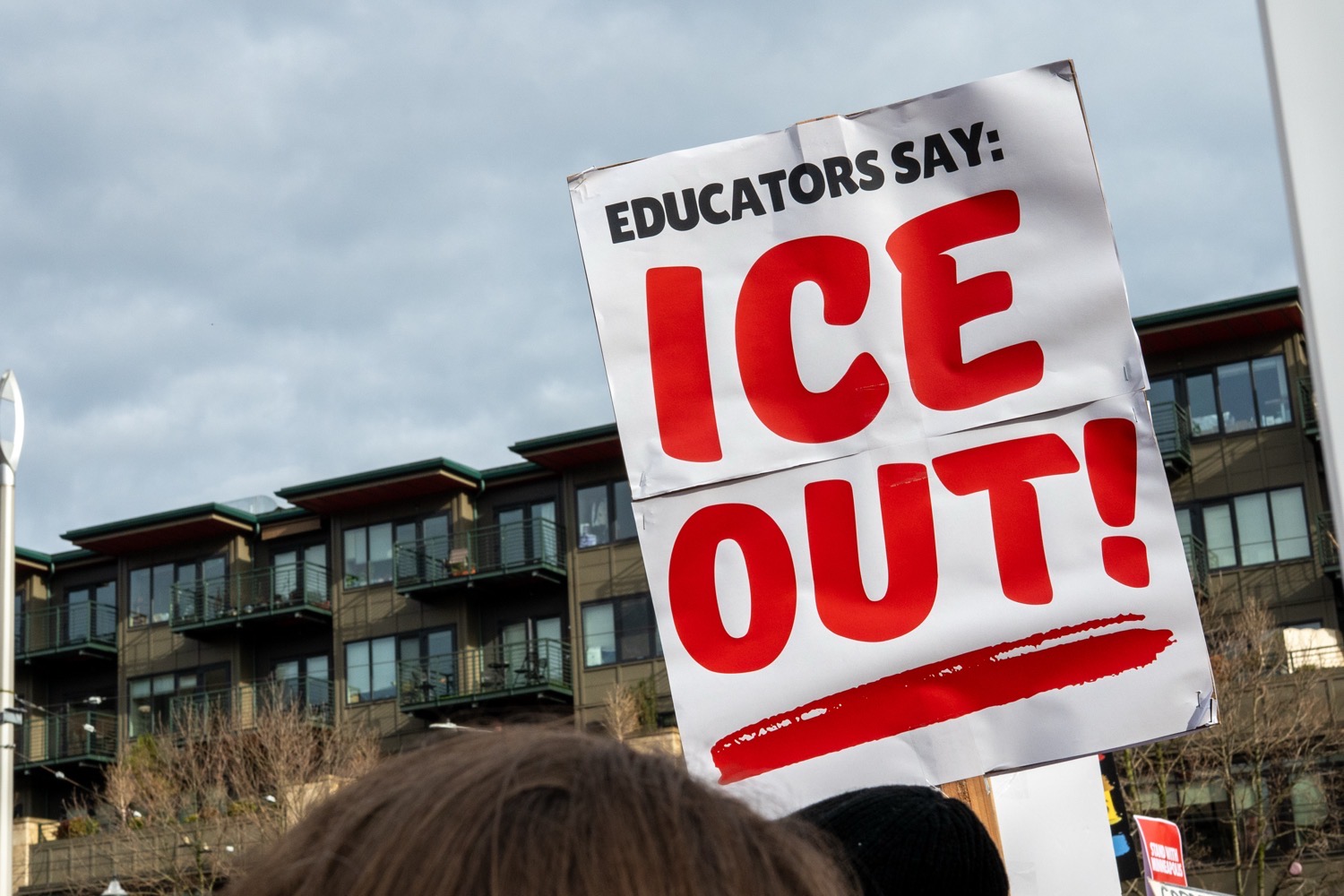

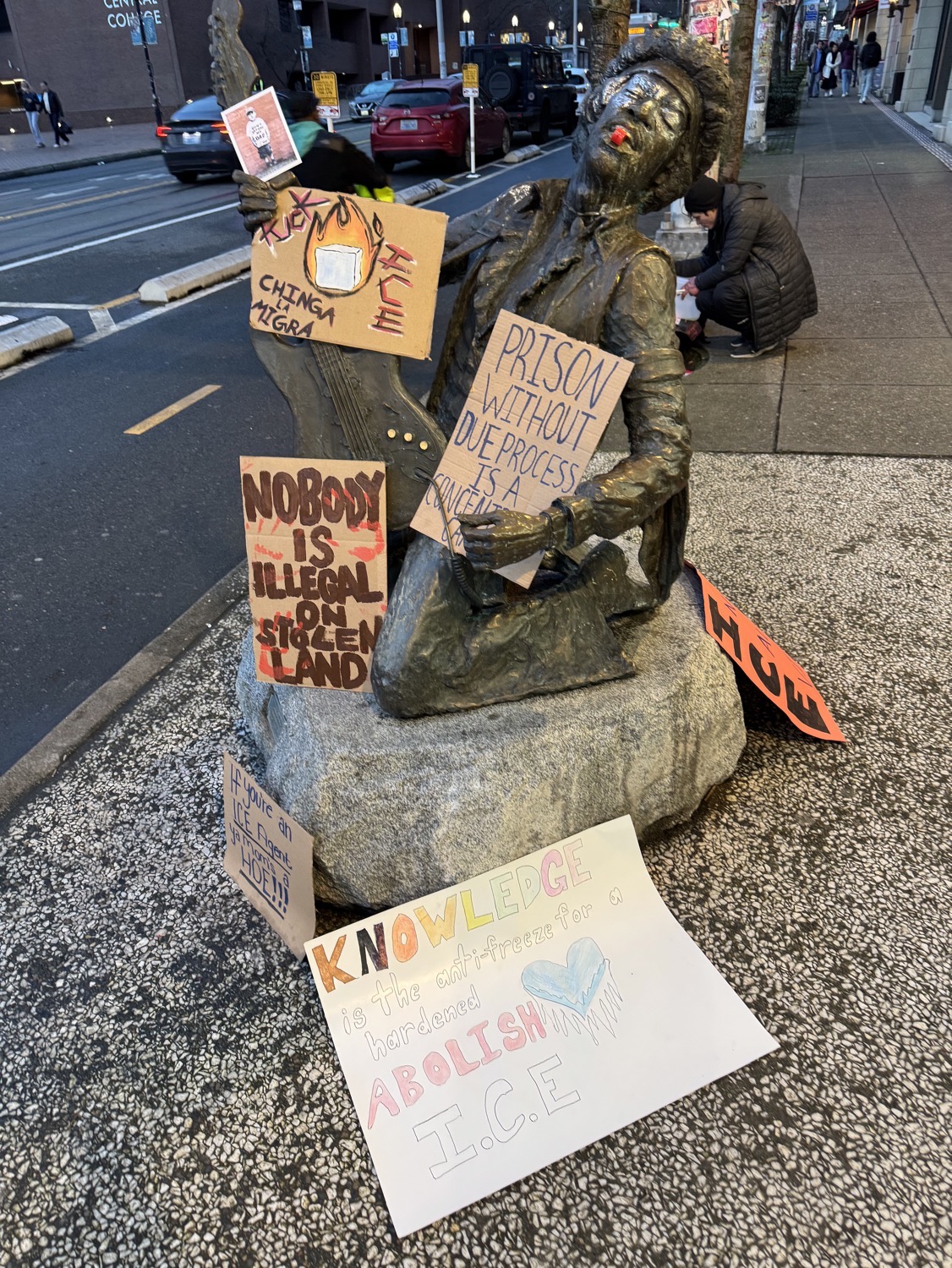

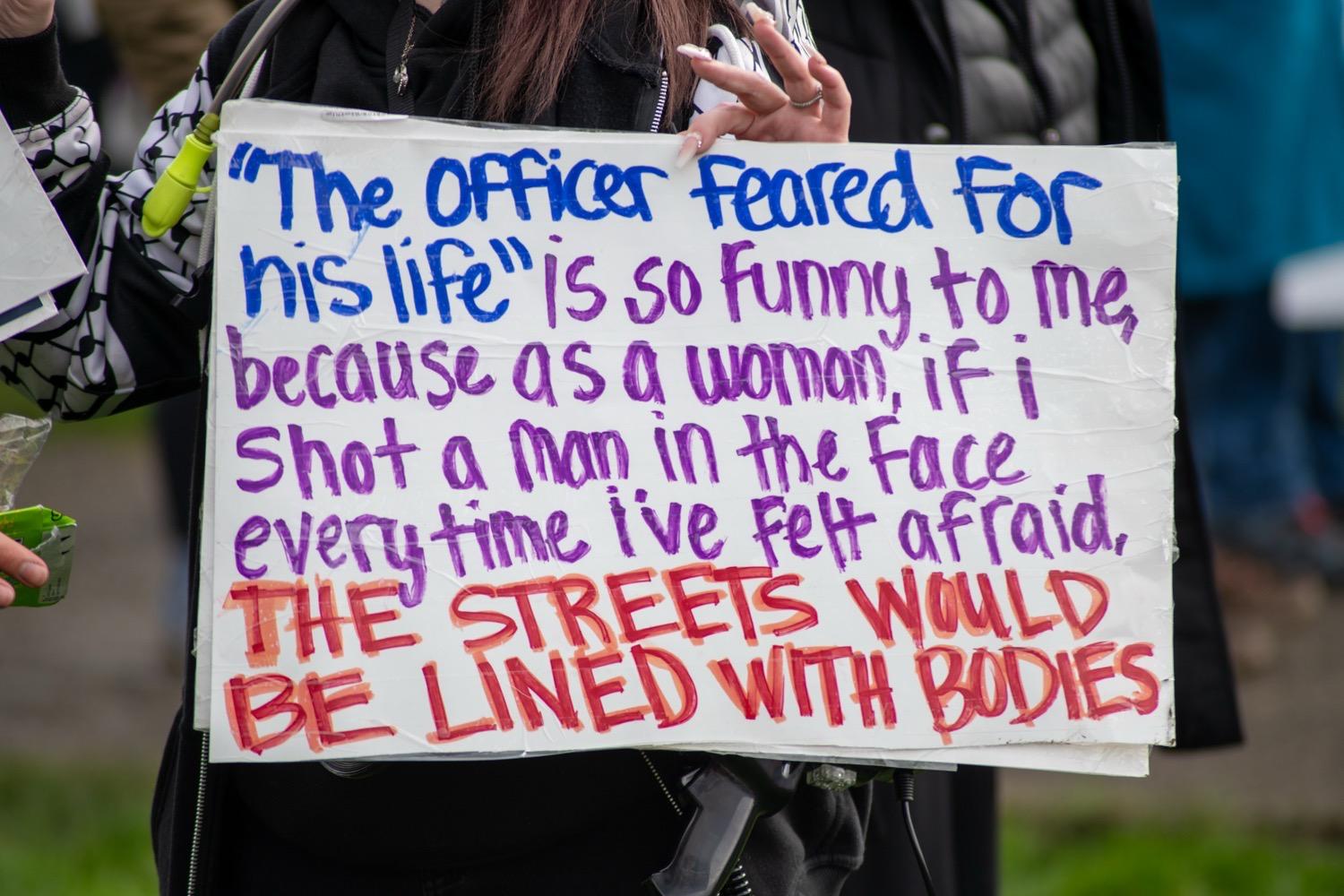

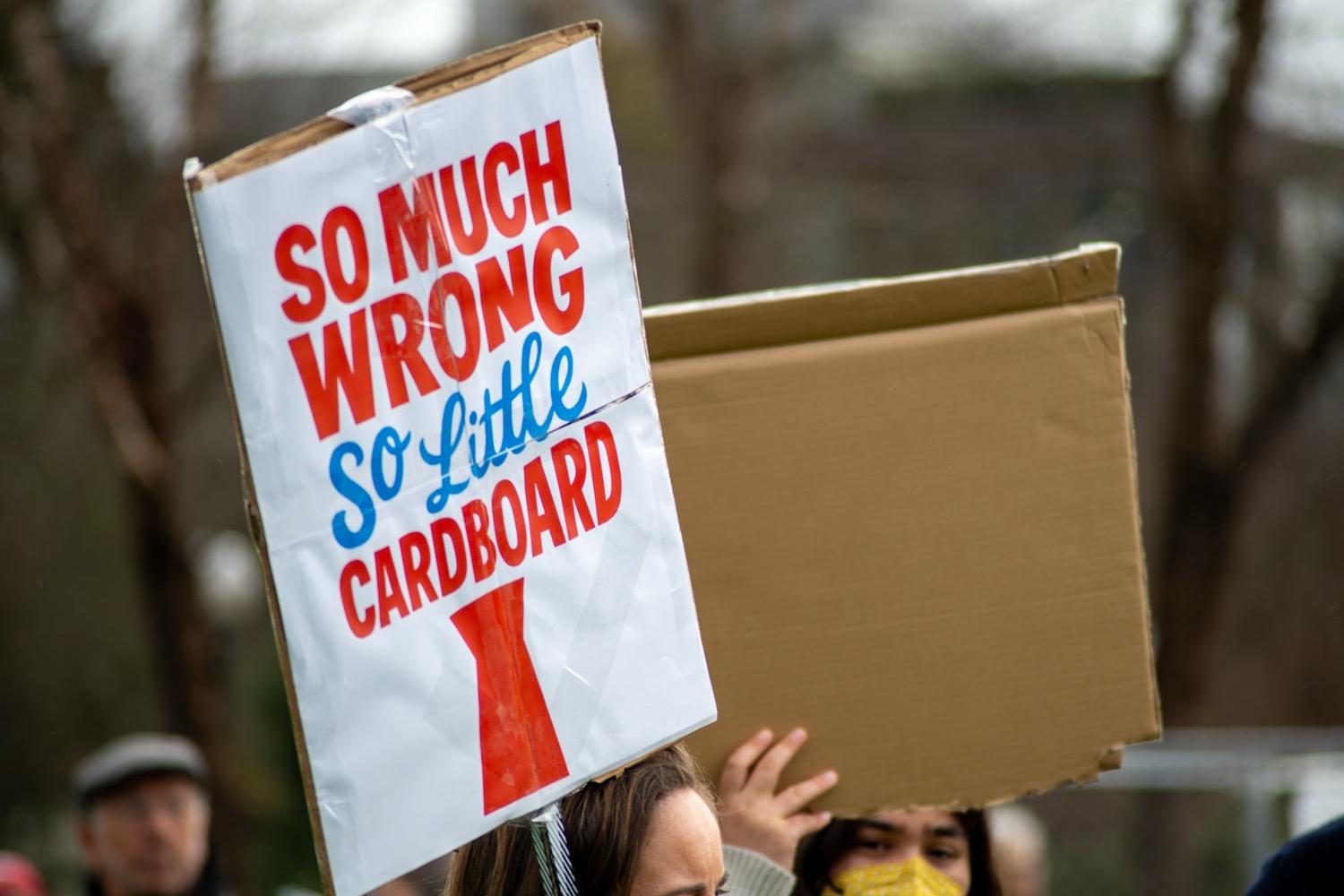

On the home front, our big adventure last weekend was heading into Seattle on Saturday for an(other) anti-ICE protest. This one was primarily organized by Seattle-area higher education unions, and was then joined by health care and tech unions. Ended up being larger than we expected at first, with a rally at Seattle Central College and then a march down to the Federal Building. No clashes, no issues, and a good gathering of like-minded educators (including a co-worker who came along with us), healers, techies, and whoever else wanted to join in. Photos are in this Flickr album.

I’d already had plans to head out to the Mercury to get some goth clubbing in that night, so rather than having me drive back and forth from home to protest to home to club to home, we just got a hotel room nearby. After the protest we got set up in our hotel room, had dinner at a local favorite restaurant (the Annapurna Café), and then my wife got a nice quiet night in a hotel room while I went out bouncing around in a dark goth-y club for a few hours. Sunday we had a lazy, slow morning, came back home, and that was that.

This weekend was a slow Saturday of chores and dozing in front of the Olympics. We’d watched the opening ceremony on Friday evening and, well, were more underwhelmed than overwhelmed.

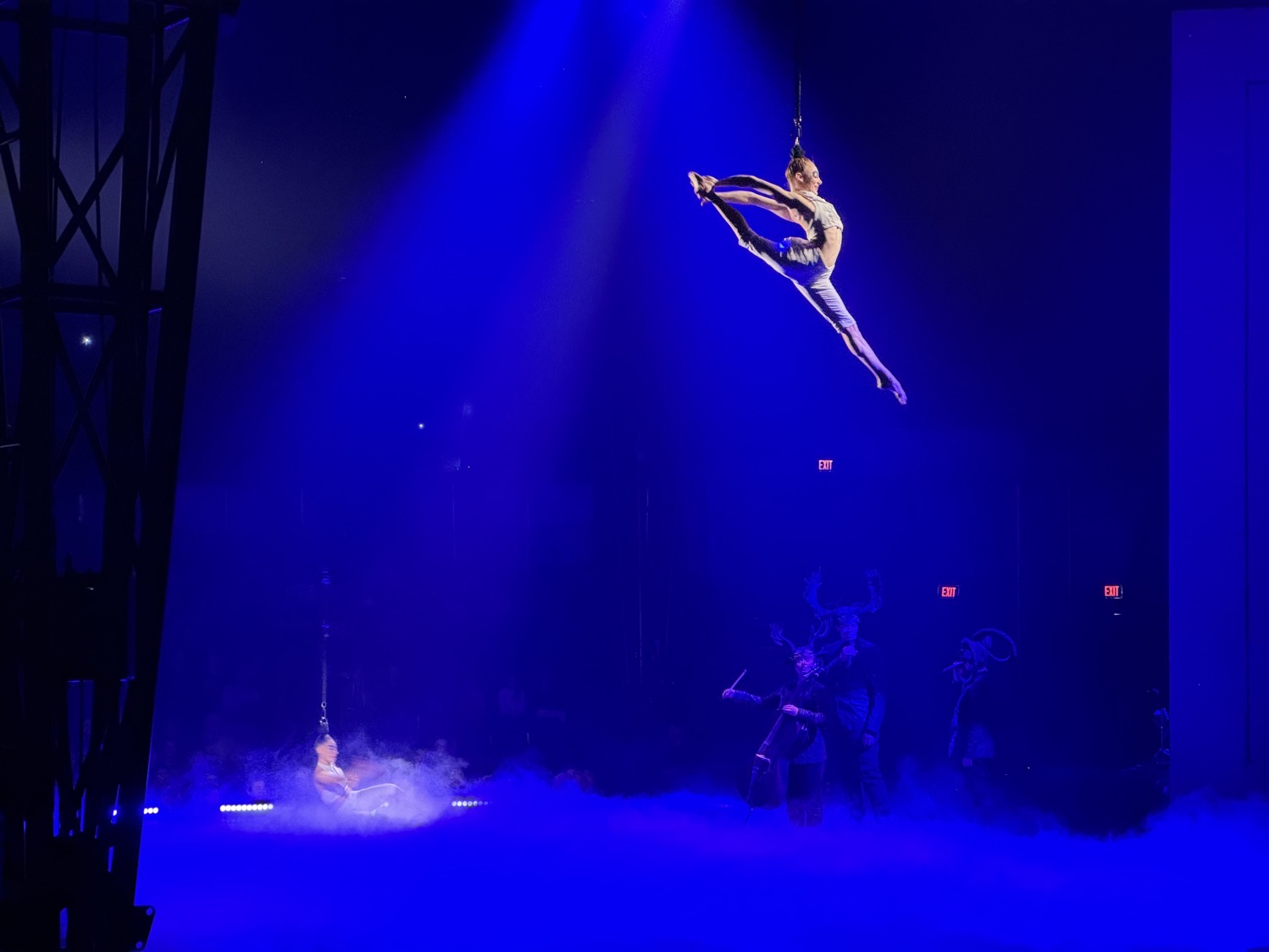

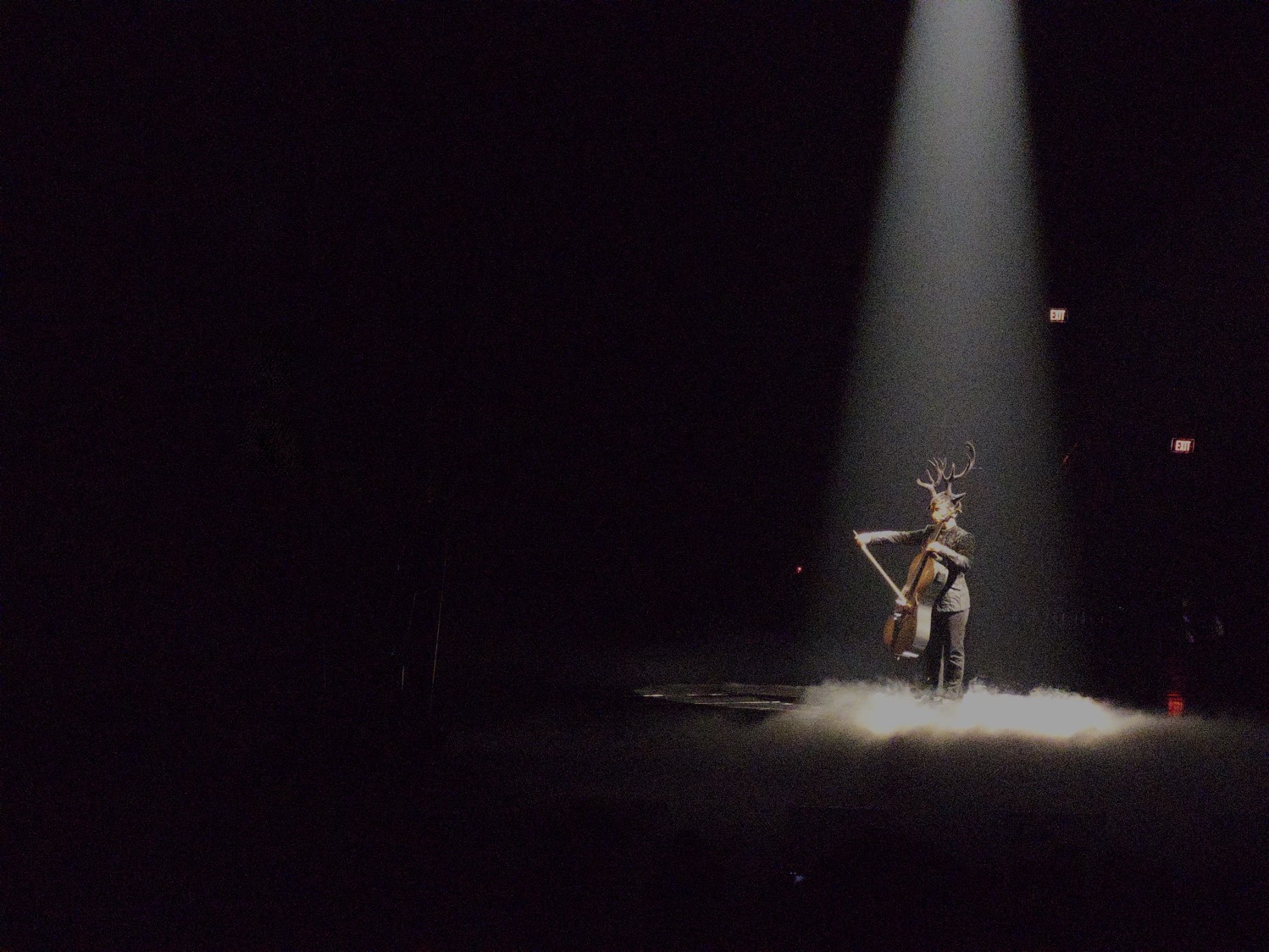

Today we went out to see Cirque du Soleil’s Echo, which just opened here. Really, really neat show — this was our first time seeing a CDS show, and it was totally worth it.

In addition to the little mini-review of the Winter Olympics opening ceremony already linked above, I also had a bit of a rant on Mastodon about modern Star Trek designing things that look neat rather than feel real. (I originally posted it on Bluesky, but Mastodon is where I actually got responses and engagement.)

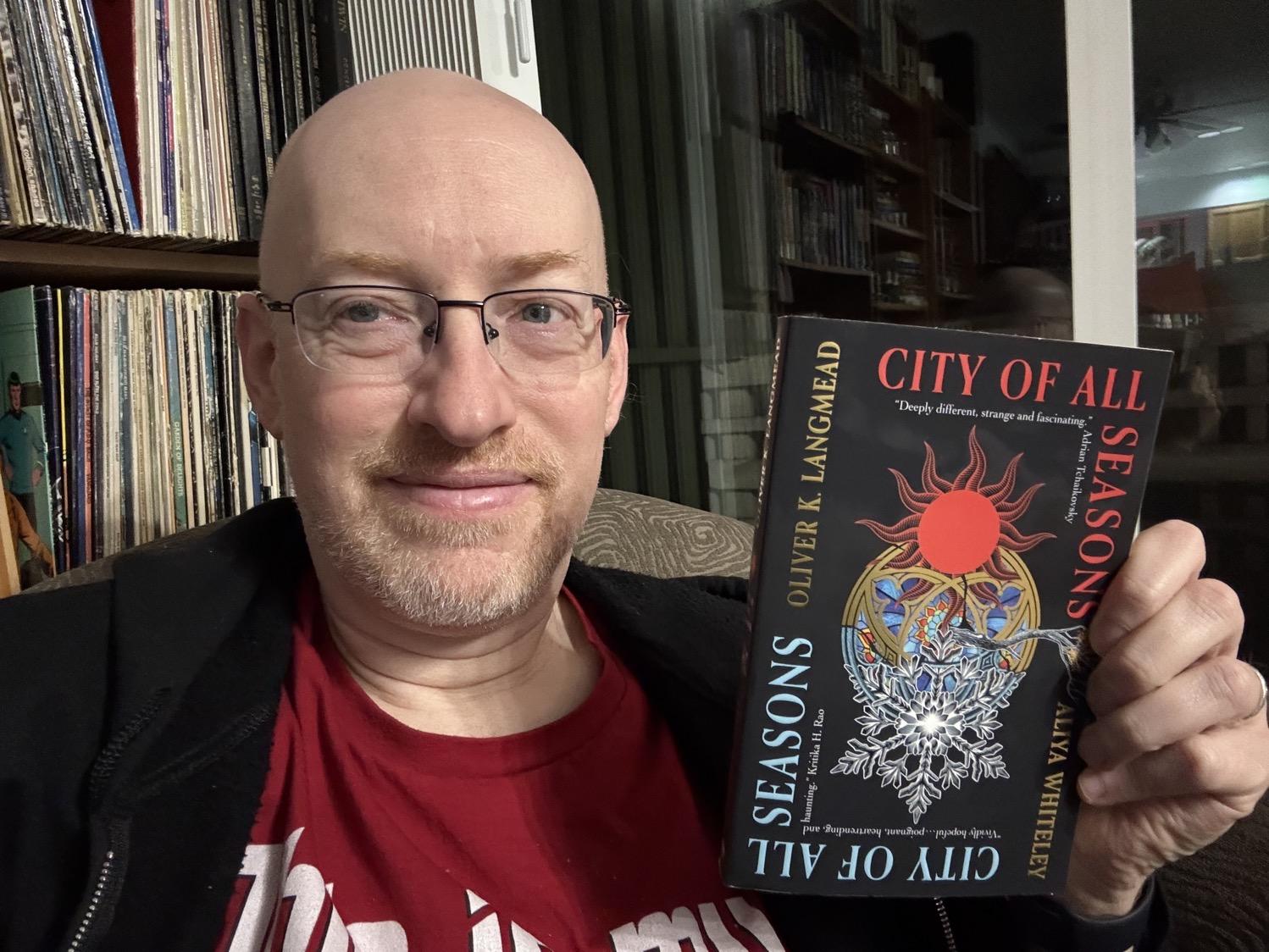

I finished my second of this year’s Philip K. Dick nominees, Oliver K. Langmead and Aliya Whiteley’s City of All Seasons, and have started my third, M. R. Carey’s Outlaw Planet.

We watched the one-off Muppet Show revival, and really enjoyed it. I’ve seen a lot of other people also saying how much they enjoyed it, so hopefully it does get picked up for a full revival.

I found a 2023 article where Consequence posted a list of their picks for the 50 Best Industrial Songs of All Time, and while like any such list, not every choice is one I’d make, it’s not bad. While reading it, I realized that I had most of the tracks on the list already, so I went on a small binge and picked up those I didn’t. So I now have a playlist to match the article, and have been enjoying it and the new additions to my collection.

Plus, these were released recently:

Accessible Social: “Accessible best practices for social media content: Learn how to create a more inclusive online experience one post at a time.”

Laura Kalbag: Accessibility for Everyone: A free edition of this 2017 book on accessibility. Some details might have changed, but accessibility best practices remain the same.

Adam Serwer in The Atlantic: Minnesota Proved MAGA Wrong (gift link; here’s an archive.is link in case the gift link ever expires): “I don’t know what the feds expected when they surged into Minnesota. …what they discovered in the frozen North was something different: a real resistance, broad and organized and overwhelmingly nonviolent, the kind of movement that emerges only under sustained attacks by an oppressive state. Tens of thousands of volunteers—at the very least—are risking their safety to defend their neighbors and their freedom.”

Daphne Carr at Pitchfork: Understanding the LRAD, the “Sound Cannon” Police Are Using at Protests, and How to Protect Yourself From It: “Short-term exposure to loud noise like the LRAD’s deterrent tone may cause a sensation of stuffed or ringing ears, known as tinnitus, which can cease minutes after the exposure or last for days. Other sound injury symptoms include headaches, nausea, sweating, vertigo, and loss of balance. Signs of more serious injury include vomiting and mucus or blood from the ears. Exposure to acute loud sounds can tear eardrums and destroy hair cells in the cochlea, which causes permanent hearing loss.”

Robert F. Worth at The Atlantic: Welcome to the American Winter (archive.is link of a paywalled original): “Again and again, I heard people say they were not protesters but protectors—of their communities, of their values, of the Constitution. Vice President Vance has decried the protests as ‘engineered chaos’ produced by far-left activists working in tandem with local authorities. But the reality on the ground is both stranger and more interesting. The movement has grown much larger than the core of activists shown on TV newscasts, especially since the killing of Renee Good on January 7. And it lacks the sort of central direction that Vance and other administration officials seem to imagine.”

Cheyanne M. Daniels at Politico: Third ‘No Kings’ nationwide protest planned for March: “The group behind the nationwide ‘No Kings’ protests are planning their third demonstration of President Donald Trump’s second term — and are anticipating even greater turnout than their earlier rallies.”

Sarah Jeong at The Verge: Best gas masks: “There isn’t a lot of reliable information out there about how to buy a gas mask, especially for the specific purpose of living under state repression. But hopefully after reading this guide you’ll feel equipped to make an educated decision.”

Mia Sato at The Verge: The rise of the slopagandist (gift link; here’s an archive.is link in case the gift link ever expires): “We mostly talk about it in the context of AI-generated material, but slop does not need to be synthetic — AI slop is just a subgenre of a larger type of content that is made quickly and cheaply and poorly. The same lukewarm financial advice peddled by thousands of literal talking heads on Instagram Reels is slop. Falsehoods and oversimplifications about breaking news or contentious celebrity drama that snowball to millions of views is slop. Engagement bait is slop. The president’s social media posts are slop. The main function of slop is to take something from you: your time, your attention, your trust. It is passive in that it requires nothing from viewers but to sit back and consume it. Slop is boring, repetitive, and often inexpensive to make — the natural evolution of an internet built for scale and ruthless optimization.”

Christian Paz at Vox: Minneapolis is showing a new kind of anti-Trump resistance (archive.is link of a paywalled original): “In the Twin Cities area, meanwhile, this activism is well-organized; but it’s not a traditional, anti-government protest movement of the likes we saw during President Donald Trump’s first term. Some have called this new model ‘dissidence’ or ‘neighborism’ — or, more traditionally, ‘direct action.’ As one organizer described what’s happening in the city, ‘it’s kind of unorganized-organized.'”

Just the Browser: “Just the Browser helps you remove AI features, telemetry data reporting, sponsored content, product integrations, and other annoyances from desktop web browsers. The goal is to give you ‘just the browser’ and nothing else, using hidden settings in web browsers intended for companies and other organizations.” For Google Chrome, Microsoft Edge, and Firefox.

Minifigure Scanner: Use this website on your phone to check which figure is in that blind bag before you buy it.

Book 4 of 2026: City of All Seasons by Oliver K. Langmead and Aliya Whiteley.

Second Philip K. Dick award nominee of the year. As such, not reviewed.

Book 3 of 2026: Casual by Koji A. Dae.

First Philip K. Dick award nominee of the year. As such, not reviewed.

Just a brief update to my prior Affinity by Canva Accessible PDF Output Test post. As Affinity recently updated to v3.0.2, I ran the test again.

The results are nearly identical; the only change I noticed was a regression: The footer text on the master page now appears once at the very bottom of the tag tree instead of being properly artifacted out. In the prior version of this test using Affinity 3.0.1, the footer text was properly omitted from the tag tree.

Updated versions of the test file and output may be downloaded if you’d like to play along at home:

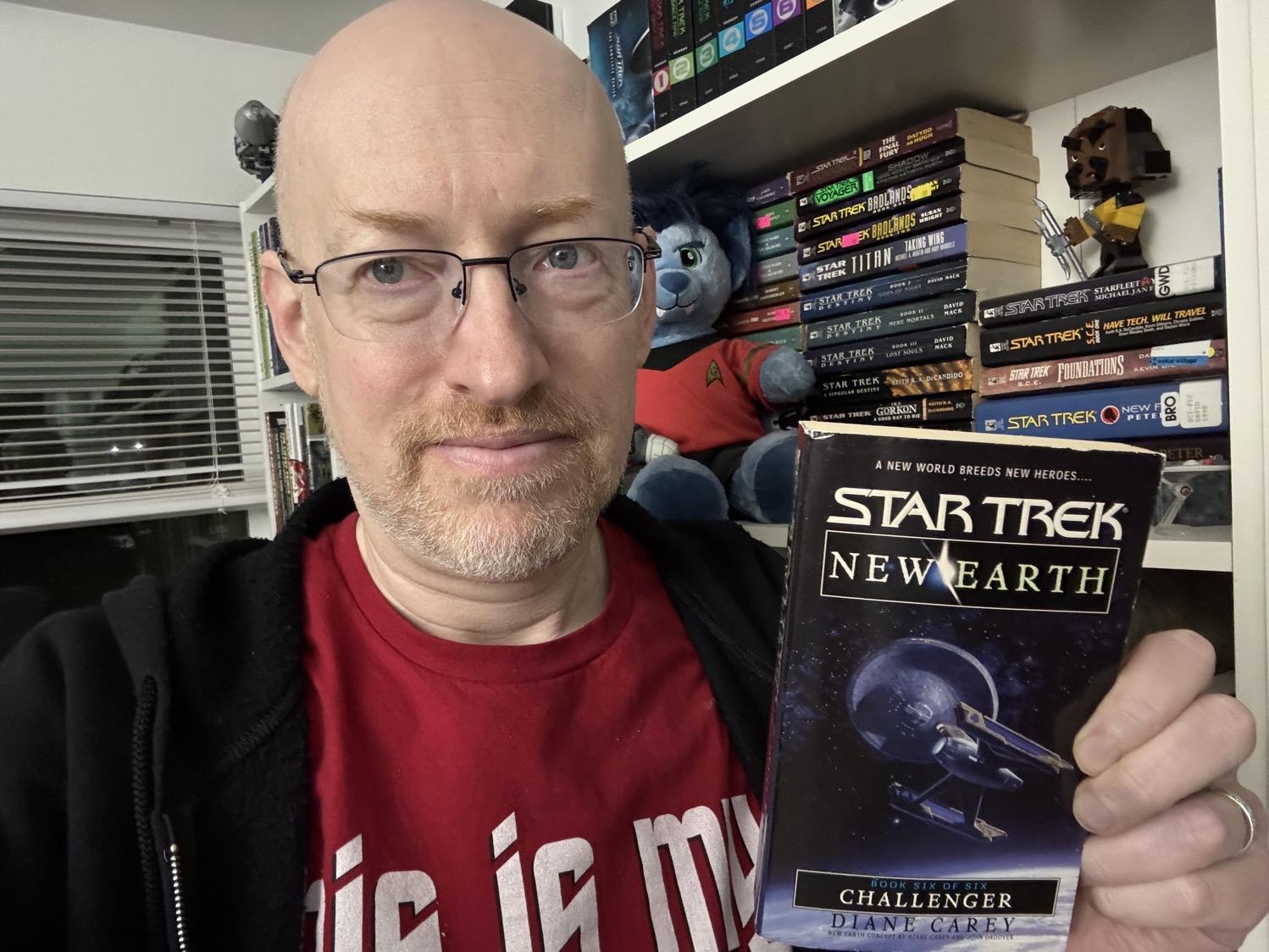

Book 2 of 2026: Challenger by Diane Carey: ⭐️⭐️⭐️.

The tone of this one was really odd. Much of it is centered on the main crew of another ship, which is fine in and of itself, but there was something about their interactions that was so flippant and irreverent that for me, it blew right past “different ship with a different, quirkier feel than the Enterprise” all the way to “how are these people functioning with each other, within Starfleet, or in the universe in general?” As a capstone to the series, it wrapped up all the major plot points well enough, but the odd tone was really off-putting for me.

Saturday night we went out to see one of the 40th anniversary theatrical showings of Labyrinth. I don’t remember seeing it in the theater when it came out — I would have been 12, so right in the target range, but I have no memory of doing so — and it was a real treat to be able to do this. It holds up well!

Sunday we headed up to Seattle’s Cal Anderson Park for one of this weekend’s “ICE Out for Good” rallies. I’ve uploaded my full photo set to Flickr, as usual.

Niki Tonsky: It’s hard to justify Tahoe icons: “The main function of an icon is to help you find what you are looking for faster. ¶ Perhaps counter-intuitively, adding an icon to everything is exactly the wrong thing to do. To stand out, things need to be different. But if everything has an icon, nothing stands out.”

Casey Newton at Platformer: Debunking the AI food delivery hoax that fooled Reddit: “For most of my career up until this point, the document shared with me by the whistleblower would have seemed highly credible in large part because it would have taken so long to put together. Who would take the time to put together a detailed, 18-page technical document about market dynamics just to troll a reporter? Who would go to the trouble of creating a fake badge? ¶ Today, though, the report can be generated within minutes, and the badge within seconds.”

Stefano Marinelli: The Virtue of Finished Things: “I received an email yesterday morning. It was a thank-you note for one of the open-source tools I created and maintain. The sender explained how useful the software was for their specific needs, and as always, this brought me an immense sense of satisfaction. ¶ But at one point in the email, a question appeared – one that has become a recurring theme in the modern software world: ‘I notice there haven’t been any new releases for about ten months. Should I consider the project abandoned?'”

Teresa Duryea Wong at Quiltfolk: One Year After an Uncomfortable Choice for Best in Show: “This is a protest quilt. It was made by an artist whose day job puts her on the front lines of one of the most grotesque realities in America today. She is a teacher. ¶ What We Will Use as Weapons: A List of School Supplies is the title for this provocative work of art that features school supplies hurling toward the center on the front and an assault rifle on the back. This long, narrow quilt is the actual size and shape of a door. An outline of a human is stitched through the layers. On the front, the person is meant to represent a shooter, and on the reverse side, a teacher.”

Jacquelyn Jimenez Romero at the South Seattle Emerald: A Fistful of Loud: Seattle Neighbors Build Whistle Kits to Protect Immigrants From ICE: “‘By making noise, you bring visibility to what is happening on the street,’ said Kate Macfarlane, who started the WA Whistles project. ‘ICE relies on shock tactics and moving in very quickly … it turns [an] otherwise pretty silent abduction into a loud, highly visible opportunity for neighbors to rally.'”

WA Whistles: “Our purpose is to spread whistles throughout WA to help communities protect themselves against ICE.”

Joseph Cox at 404 Media: DHS Is Lying To You (archive.is link of a paywalled original): “At least four videos show what really happened when ICE shot a woman in Minneapolis on Wednesday. DHS has established itself as an agency that cannot be trusted to live in or present reality.”

Melissa Turniten at Fox9 KMSP: Minneapolis ICE shooting: Eyewitness accounts contradict ICE statement: “Minneapolis Mayor Jacob Frey calls the claim the shooting was self-defense ‘bullshit’ and is a ‘garbage narrative’ after seeing video of the shooting. Minnesota Gov. Tim Walz has also seen the video, saying ‘Don’t believe the propaganda machine. The state will ensure there is a full, fair, and expeditious investigation to ensure accountability and justice.'”

Jennifer Mascia at The Trace: How Many People Have Been Shot in ICE Raids?: “Using Gun Violence Archive data and news clips, The Trace has identified 16 incidents in which immigration agents opened fire and another 15 incidents in which agents held someone at gunpoint since the crackdown began. At least three people have been shot observing or documenting immigration raids, and five people have been shot while driving away from traffic stops or evading an enforcement action.”

Joseph Cox at 404 Media: Inside ICE’s Tool to Monitor Phones in Entire Neighborhoods (archive.is link of a paywalled original): “A social media and phone surveillance system ICE bought access to is designed to monitor a city neighborhood or block for mobile phones, track the movements of those devices and their owners over time, and follow them from their places of work to home or other locations, according to material that describes how the system works obtained by 404 Media.”

Andy Greenberg and Lily Hay Newman at Wired: How to Protest Safely in the Age of Surveillance: “Two key elements of digital surveillance should be top of mind for protestors. One is the data that authorities could potentially obtain from your phone if you are detained, arrested, or they confiscate your device. The other is surveillance of all the identifying and revealing information that you produce when you attend a protest, which can include wireless interception of text messages and more, and tracking tools like license plate scanners and face recognition. You should be mindful of both.”

Joanna Kavenna at The Guardian: Mass surveillance, the metaverse, making America ‘great again’: the novelists who predicted our present: “From Jorge Luis Borges to George Orwell and Margaret Atwood, novelists have foreseen some of the major developments of our age. What can we learn from their prophecies?”

Ari Anderson at The Stranger: What I Learned About the Future at Seattle WorldCon: “Like standing on the threshold of a cosmic portal, Seattle’s convention center buzzed with bards, fae, aliens, monsters, warriors and spaceships, far away planets and misty forests, innumerable stories of heartbreak and triumph, all tantalizingly within reach between the covers of a thousand books.”

Elizabeth Lopatto at The Verge: Tim Cook and Sundar Pichai are cowards: “Since X’s users started using Grok to undress women and children using deepfake images, I have been waiting for what I assumed would be inevitable: X getting booted from Apple’s and Google’s app stores. The fact that it hasn’t happened yet tells me something serious about Silicon Valley’s leadership: Tim Cook and Sundar Pichai are spineless cowards who are terrified of Elon Musk.”

Julia Shumway at the Washington State Standard: Federal judge blocks Trump election order, siding with Oregon, Washington: “A federal judge in Washington state on Friday permanently blocked the Trump administration from enforcing a 2025 executive order that sought to require voters prove citizenship and that all ballots be received by Election Day.”

Happy holidays (part two)!

Well, we wrapped up 2025…and as happy as we were to see 2025 end, 2026 is already looking to keep the dumpster fires burning bright.

At work, the week was fine. Back in the office this week, though as it was still in the holiday break, it was another pretty slow week. Next week classes start, so things will pick back up again. The slowdown is always nice, but it’ll also be good to have things back to normal after the holidays.

Here at home, we had a nice quiet New Year’s Eve. It was even a bit quieter than we expected, as there weren’t as many local unsanctioned fireworks as there have been in years past.

Out in the wider world, though, we all woke up one morning just a few days into the year to discover that the US had invaded Venezuela and abducted its president and his wife. Because…sigh. We are continuing to speed run becoming everything as a country that I was brought up being told that we weren’t. And even though the older I get and the more I learn, the more obvious it is how far we always have been from the ideals we claimed to uphold, it’s still mind-boggling to be where we are now.

As I said on Mastodon: “I’m confused: Is being a brown-skinned person accused of being involved with drugs something that gets you kidnapped and forcibly kicked out of the country or kidnapped and forcibly brought into the country?”

Though really, after what we saw of Trump in his first term and so far in his second, the only thing that’s really surprising me about all of this is how many people are just…going along with it (most notably Congress — especially, but not at all limited to, the Republican party — and the Supreme Court). The system of checks and balances has apparently given up trying to either check or balance, and that’s perhaps the most troubling part of all of this.

This week I recorded my responses to the current SFWA survey on AI use in the SFF writing/publishing industry, did my annual reading wrap-up for the year, and posted my resolutions for this year.

Finished my last book of the year, Rough Trails by L.A. Graf, and my first book of the year, Thin Air by Dean Wesley Smith and Kristine Kathryn Rusch, both parts of a six-book TOS-era Star Trek series.

We watched two movies over the weekend:

Mission Impossible: The Final Reckoning (2025): ⭐️⭐️: A particularly disappointing finale to the series.

NOTE: For regular readers (assuming there are any), a bit of clarification on how I link some items: I use archive.is for pages on sites that are paywalled (including sites that will only show content if adblockers are disabled) and for Substack pages (because Substack is another Nazi bar). Starting with this week’s post, I will also include links to the original pages, as not everyone has the same attitudes as I do about these things and may have subscriptions to the sites in question, not be as annoyed as I am at dealing with paywalls, ads, and the like, or have either accepted Substack as a “necessary evil” or be unaware of its problematic practices.

National Society of Tax Professionals: USPS Announces Changes to the Postmark Date System: “…while a postmark confirms the USPS possessed a mail piece on the date inscribed, that date does not necessarily align with the date the USPS first accepted possession of the item.” Potentially impactful in a number of important scenarios, including voting by mail. Undated informational page, but the rule took effect in November 2025.

Foz Meadows: Against AI (archive.is link of Substack original): “AI is unethical on a scale that SFF authors should be uniquely placed to appreciate, its evils mirroring metaphors that are older than our present civilization. AI is the cursed amulet, the magic mirror, the deal with the devil, the doppelganger that learns our secrets and steals our face; it’s a faerie illusion, leprechaun gold, the fox’s trick that gives rot the look of resplendence, the naked emperor parading with his cock out; it’s the disembodied voice that whispers let me in, the zombie virus that transforms the known into the unrecognizable, the corrupting fungi whose tendrils invade and poison. It’s the literal fucking One Ring, telling us that of course we’d use its power for good, compelling us to pick it up so that through us, it might do great evil.”

Chuck Wendig: My Open Letter to That Open Letter About AI in Writing and Publishing: “AI IS NOT INEVITABLE. ¶ The only strategy here is the sum total pushback against its uncanny horrors and its non-consensual intrusion into every corner of our world — it steals our content, guzzles our water, increases our power bills, is crammed into services we didn’t ask for it to be crammed into while also charging us more money for the ‘privilege.’ There is no strategy here except to find the fields where the AI grows and metaphorically set them aflame. ¶ And shame and anger against corporate overreach is a powerful fire.”

Trekorama!: 3D walkthroughs of locations on various Star Trek ships, including the Enterprise 1701 (main bridge), 1701-D (main bridge, engineering, sick bay, Ten-Forward, transporter room, Picard, Data, Troi, and Worf’s quarters, and a shuttle), 1701-E (bridge), and Kelvinverse version (bridge and corridor), Defiant (deck one), Voyager (deck one, sickbay, transporter room, engineering, mess hall), Discovery (bridge, transporter room, mess hall, and corridor), and Klingon Bird of Prey (bridge), plus the real-world ISS.

David Reamer at the Anchorage Daily News: Termination dust: Its history, evolution in meaning and possible origin (archive.is link of a paywalled original): “…the history and evolution of termination dust as a turn of phrase offers education, enlightenment and entertainment. Over the decades, there have been changes in meaning and connotation. Throughout those years, it remains a significant detail of local history, a widely recognizable bit of slang whose lore maps closely against that of the town itself.”

Robin Young and Emiko Tamagawa at WBUR: ‘Wake Up Dead Man’: Rian and Nathan Johnson on blending mystery and faith in new ‘Knives Out’ movie: Brief but interesting interview touching on the religious motifs in Wake Up Dead Man.

John Scalo: Was Daft Punk Having a Laugh When They Chose the Tempo of Harder, Better, Faster, Stronger?: “I think our helmet-clad robot friends might have been making a little joke that we’ve apparently all missed. The BPM of Harder, Better, Faster, Stronger is actually 123.45.” Fun bit of music trivia, plus a bit of a peek at the difficulties of having a computer do something that seems relatively easy for humans; in this case, determining a song’s tempo.